Many road warriors, as we know all too well, expect that when they head to the airport to board their flight and attend to business, they will then return home safely. It happens all the time. For anyone who has walked through an airport of late, there is no doubting that the desire to get back out into the world has returned. Gates are packed, with check-in and security lines back to their former slow-crawl patterns.

What is not expected is to see planes parked at the gates, along taxiways and worse. Who knew that a simple computer glitch could take down the air traffic control network across the United States? When news of a glitch spreads it almost never turns out to be a minor irritation. Of late we have read about such glitches affecting banks, trading houses and more – so much so that for the community at large, it’s become almost a case of well, here we go again. But bringing air travel to a halt, that’s a glitch on a completely different plane! Everyone, it seems, is left hurting.

So what did happen? Was it a breach in security? Ransomware? Someone flipping the wrong switch? Apparently it had something to do with the database. CNN reported it in an update provided January 12, 2023, under the headline “A corrupt file led to the FAA ground stoppage. It was also found in the backup system”: “The computer system that failed was the central database for all NOTAMs (Notice to Air Missions) nationwide. Those notices advise pilots of issues along their route and at their destination. It has a backup, which officials switched to when problems with the main system emerged, according to the source.”

Just this Friday, in a further update, BBC News reported in the article “FAA outage: US airline regulators blame contractor for travel chaos”: “The FAA said that their contract employee, who was not identified, deleted the files while working to synchronize the primary and backup Notam databases.” Imagine that: a problem with synchronizing apparently replicated databases. Once again, it can be said that it was human error, but then again, who was looking over the shoulder of this contract employee?

There is a reason why we don’t let individuals work unsupervised on the maintenance of mission-critical systems. There was a time when we talked about putting a big dog between operators and the console or, in the case of NonStop, two dogs. As I was reminded by NonStop technical consultant and longtime supporter of the NonStop community, Bill Honaker, it is important to remember that steps can be taken to avoid human error.

At a customer that was upgrading all of their NonStop servers while their app stayed available, there was a rule that “any time an engineer was to shut down a cabinet, two people had to lay hands on it and separately identify it as offline and ready to go down. And all my config changes were reviewed by the other project manager.”
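
The two-person rule described above can also be enforced in tooling rather than left to procedure alone. What follows is only a minimal sketch in Python, not anything the customer or NonStop actually uses: the `ChangeRequest` class, the `execute` helper and the names in it are hypothetical, invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """A proposed maintenance action on a production system (hypothetical model)."""
    description: str
    author: str
    approvers: set = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        # The author cannot sign off on their own change.
        if reviewer == self.author:
            raise PermissionError("author cannot approve their own change")
        self.approvers.add(reviewer)

    def is_authorized(self, required: int = 1) -> bool:
        # Authorized once enough distinct reviewers have approved.
        return len(self.approvers) >= required


def execute(change: ChangeRequest, action):
    """Run the action only if a second person has confirmed the change."""
    if not change.is_authorized():
        raise PermissionError(f"'{change.description}' needs a second approver")
    return action()
```

With a guard like this, a request to shut down a cabinet raised by one engineer is refused until a different person has approved it, which is the software equivalent of two people laying hands on the cabinet.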

For the NonStop community, to reference the well-known charter of NASA during the Apollo Program, failure is not an option. NonStop systems have been running for decades without outages all the while masking outages, disaster or otherwise, such that the general public was never impacted. That is the true value of depending upon a fault tolerant platform that already comes with a built-in Plan B.

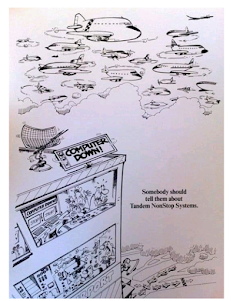

From the archives (circa 1980s)

Courtesy Ceferino Perez, Madrid, Spain

As appeared this week on LinkedIn

However, even with the most robust application deployments on NonStop, there is almost uniform recognition that beyond fault tolerance there is disaster tolerance. Natural disasters, along with the efforts of malicious individuals or institutions to disrupt operations, have become almost routine occurrences. The need for a second and perhaps even a third (or more) geographically separated site, in case the primary data center burns down, has taken on even greater importance. With this in mind, there is the need for a broader Plan B than one focused on just a single system.

For the NonStop user the options are out there and available from multiple sources. “Our business for nigh on four decades has been all about ensuring disasters do not take down the operations of any of our NonStop customers,” said Tim Dunne, NTI’s Global Director Worldwide Sales. “When you look at the complexity of today’s NonStop modern deployments, it is of paramount importance that recovery can be achieved in real time to where the impact on business goes unnoticed.”

NTI is among a group of vendors who have specialized in ensuring data centers relying on NonStop aren’t subject to outages for any reason. It would be so easy for all involved in NonStop to cast stones at those IT organizations whose systems are not as robust as what is delivered with NonStop, but are there vulnerabilities in the way NonStop systems are engaged in dialogues with one another? Are we really sure that when that switch is flipped, it will all work?

“We know that many of our customers do indeed have procedures to ensure their backups are up to the task,” said Dunne. “We have helped many NonStop customers develop plans that take advantage of NTI’s DRNet®/Unified product suite and yes, we continue to provide this service as part of our License agreement; as much as necessary without ever charging a Professional Services Fee. NonStop customer support of our Active/Active option has been experiencing significant growth over the past decade.”

From firsthand experience, Dunne also noted the value that comes with direct line of sight with those implementing a broader approach to Plan B. “Having embraced Change Data Capture (CDC) methodologies earlier than anyone else, NTI is experienced in ensuring whatever Plan B is being considered can be delivered. Furthermore, as the need for simplified approaches to software maintenance and upgrades remains a priority, there is an emerging, growing trend in support of not only robust solutions but of those that are economically viable.”

My discussions on the subject of primary and backup systems and databases were not limited to just NTI. When the experience at the FAA was raised with Bill Honaker, his response was to the point. “Many customers spend the extra effort to run their sites as Active/Active. To do a ‘transparent’ switchover, that’s the best way,” said Bill. “IT organizations may say that because the NonStop platform is ‘old’ (from the 90s) it’s risky but a lot of us have experience with handling this kind of environment dating back to those times. It is all about designing for fault tolerance, everywhere (Power, Network, Switches, Routers, Servers, Database). Companies (and agencies) don’t know it’s possible because of the ‘good enough’ mindset that pervades the industry, in my opinion, so they don’t even try.”

As for the view of others who provide services supporting disaster recovery, when I reached out to Collin Yates of TCM Solutions, he made an even stronger case for involving experienced personnel in creating working Plan B configurations. “Being able to switch from one site (one DC) to another seamlessly to the users, especially in a 24x7x365 environment, comes down to two factors really. The first being that the application in question is running in an Active/Active (A:A) configuration. That is to say transactions are being processed on BOTH nodes simultaneously,” said Collin.

“As for the second factor, it’s all about customers having a very good and reliable Database Replication (DR) product installed that supports the A:A Application. On the NonStop that could be DRNET, Shadowbase or Oracle’s Golden Gate as the main protagonists. In a nutshell, running the right platform (NonStop of course) with right Data Replication engine (DRNET or Shadowbase) things like the outage at the FAA would or should not ever occur,” observed Collin before adding, “We are experts in Data Replication and configuring such in either A:A or Active / Passive (A:P) on your NonStop platform.” And yes, part of the service is to ensure there are steps in place to test that the processes all work to plan.

Perhaps that is the most important aspect of all for the NonStop community. Access to a generous mix of service providers, consultants and software vendors ensures that no Plan B, whatever the complexity, need give rise to anxieties over how best to ensure your most mission-critical application of all can continue serving the business through any disaster scenario imaginable. It isn’t a simple deployment to get right – there are all sorts of potential collision scenarios to work through – but the experience gained from real-world deployments has made even the most unlikely of occurrences manageable.

And when it comes to ensuring primary and backup are indeed synchronized, the NonStop community is uniquely blessed by the presence of industry-leading, usable software products that do exactly that. Think TANDsoft with their high-performance FS Compare and Repair utility. There is no reason to leave to chance, or to inexperienced technical staff, any opportunity to perform operations that could lead to events such as that experienced by the FAA.
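
To make the idea of checking that primary and backup are synchronized a little more concrete, here is a generic sketch in Python of a read-only comparison that reports differences before anyone attempts a repair. It is not how FS Compare and Repair or any replication product works internally. The `digest` and `compare` functions, and the key-to-bytes dictionaries standing in for the two databases, are assumptions made purely for illustration.

```python
import hashlib


def digest(record: bytes) -> str:
    """Return a SHA-256 fingerprint of a record's contents."""
    return hashlib.sha256(record).hexdigest()


def compare(primary: dict, backup: dict) -> dict:
    """Report differences between two key -> bytes mappings without modifying either."""
    missing = sorted(k for k in primary if k not in backup)
    extra = sorted(k for k in backup if k not in primary)
    mismatched = sorted(
        k for k in primary
        if k in backup and digest(primary[k]) != digest(backup[k])
    )
    return {
        "missing_in_backup": missing,
        "extra_in_backup": extra,
        "mismatched": mismatched,
    }


# Hypothetical sample data standing in for primary and backup copies.
primary = {"rec1": b"alpha", "rec2": b"bravo", "rec3": b"charlie"}
backup = {"rec1": b"alpha", "rec2": b"BRAVO", "rec4": b"delta"}
report = compare(primary, backup)
```

The design point is that the comparison step never writes to either copy, so a reviewer can inspect the report and approve any repair separately, rather than having one person delete or overwrite files in the middle of a synchronization.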

The FAA gained considerable notoriety over these failings, and the manner in which they went about explaining what happened only made the situation worse for them. The press was particularly unkind to the way they went about solving the problem. For the NonStop community, even as the decades continue to slide past, having a fault-tolerant platform on hand as a starting point, with access to software and services that minimize the potential for such outages to occur, means Plan B is never an afterthought but an integral part of what makes NonStop the robust solution it is today.
